UC Berkeley Capstone Project

Search & Rescue Robot

what is currently happening?

The urgency of the first 24 hours after an earthquake forces first responders to operate in unstable buildings during ongoing aftershocks, resulting in elevated injury risk.

65% of aftershocks occur within the first 24 hours of an earthquake

+

The survival rate of trapped survivors drops by 30% after the first 24 hours

=

46% of first responders are injured after a major earthquake

what do the survivors need?

-

In many post-earthquake rescues, survivors must receive first aid before evacuation, particularly when they are trapped or access routes are blocked by debris. Some life-threatening conditions, such as external bleeding, are highly treatable with simple tools and guidance and can often be managed by conscious survivors themselves. Providing early on-site care for these solvable problems can prevent deterioration while extraction is delayed.

-

Survivors trapped after an earthquake often experience severe psychological stress, fear, and uncertainty about whether they will be found. This mental distress can reduce their ability to remain calm, follow instructions, or conserve energy, negatively affecting survival. Knowing they have been detected and having someone to communicate with can provide reassurance, improve cooperation, and increase the likelihood of survival while rescue is underway.

-

When a safe path becomes available, survivors need clear guidance to reach the exit. This reduces unnecessary movement and lowers the risk of disturbing unstable structures.

What if there were a way to meet all of these needs without the risks?

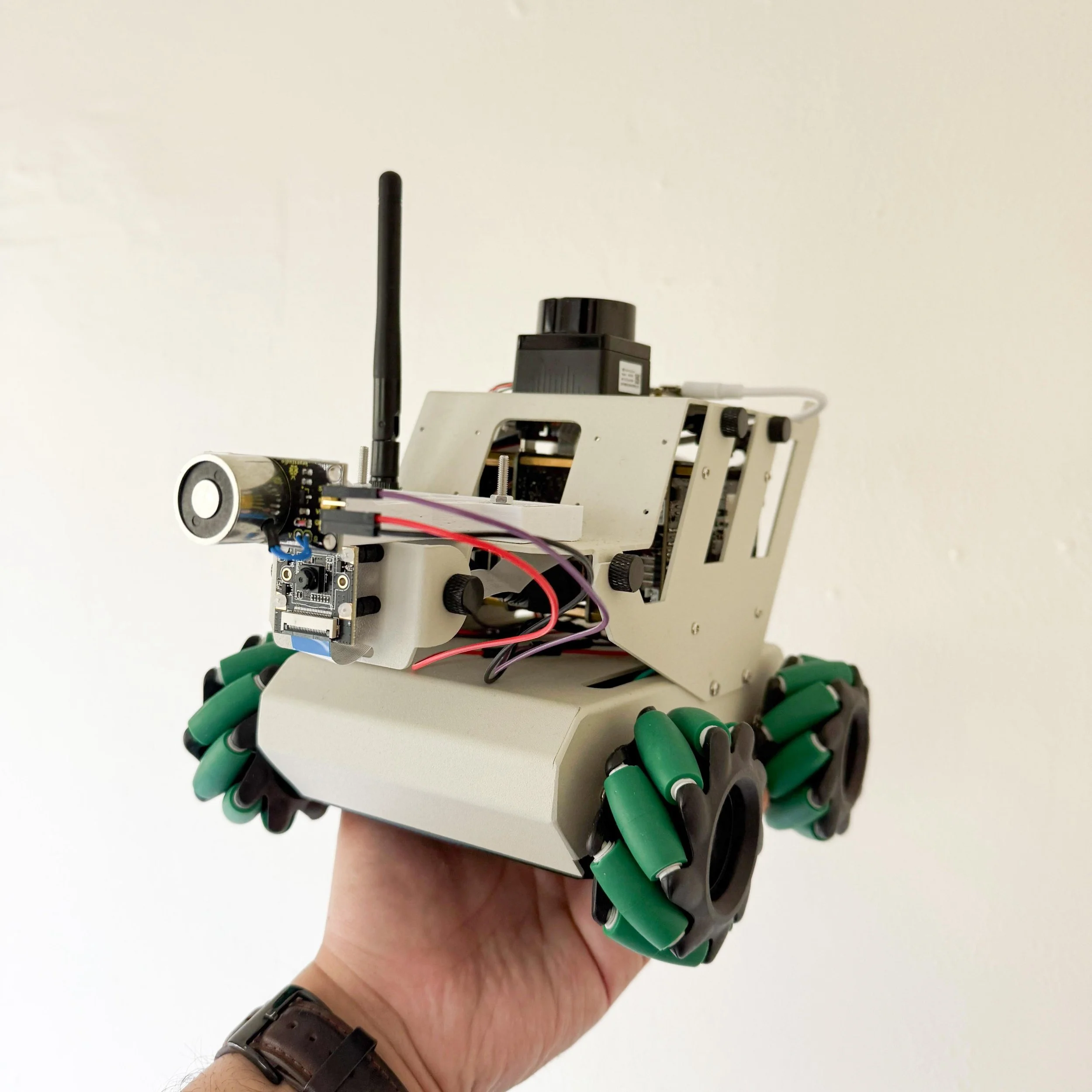

Big Impact in Small Packages

Our solution uses a compact robotic platform that can safely enter damaged buildings to support search and rescue operations. It can help locate survivors, communicate with them, and deliver basic first aid supplies, reducing the need for responders to enter hazardous environments before conditions are understood. The system can also provide information about interior conditions and survivor locations to support safer and more informed rescue decisions.

-

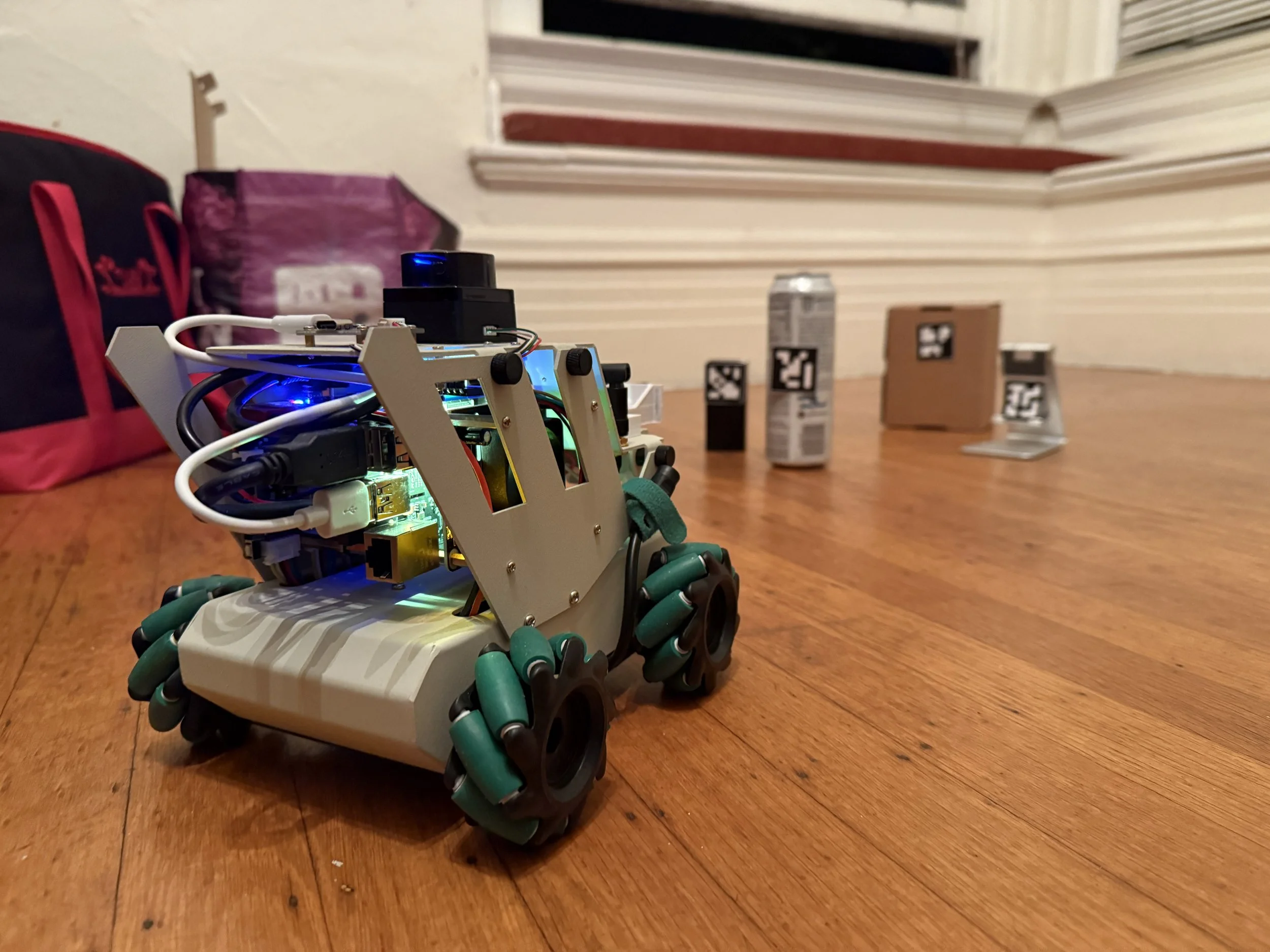

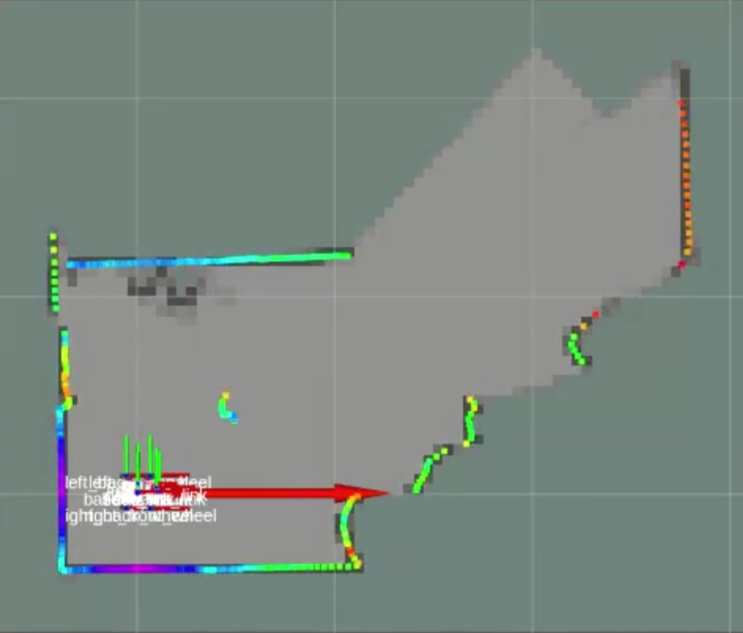

Mapping

The LiDAR lets the system perceive its surroundings. From its scans, the robot builds a map of the room's layout and, at the same time, determines where it is within that space.
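On the robot, Cartographer SLAM handles mapping and localization. As a rough illustration of the geometry underneath, the Python sketch below (hypothetical function name; the `angle_min` and `angle_increment` parameters are borrowed from the ROS `sensor_msgs/LaserScan` message) projects one LiDAR scan into occupied grid cells, assuming the robot's pose in the map is already known. A real SLAM system also estimates that pose by scan matching and tracks free space probabilistically, which this sketch omits.

```python
import math


def scan_to_occupied_cells(ranges, angle_min, angle_increment, pose, resolution, max_range):
    """Project one LiDAR scan into the set of occupied grid cells.

    Hypothetical helper for illustration; assumes the pose is already known.
    ranges: distances in metres, one per beam
    angle_min, angle_increment: beam angles in radians, in the robot frame
    pose: (x, y, theta) of the robot in the map frame
    resolution: grid cell size in metres
    max_range: readings at or beyond this (or inf/NaN) count as "no hit"
    """
    x, y, theta = pose
    occupied = set()
    for i, r in enumerate(ranges):
        # Skips zero, out-of-range, inf and NaN readings (NaN fails every comparison)
        if not (0.0 < r < max_range):
            continue
        # Beam direction in the map frame = robot heading + beam angle
        beam_angle = theta + angle_min + i * angle_increment
        hit_x = x + r * math.cos(beam_angle)
        hit_y = y + r * math.sin(beam_angle)
        # Convert the hit point from metres to integer grid indices
        occupied.add((math.floor(hit_x / resolution), math.floor(hit_y / resolution)))
    return occupied
```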

-

Navigation

With mapping, the robot can patrol the area, navigating while updating its understanding of the environment. Over a wireless connection, operators can control the robot through a live camera feed to assess the situation and guide next actions.
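A* Pathfinding appears in our software stack. The sketch below shows the textbook algorithm on a small occupancy grid, under assumptions chosen for illustration: 0 marks a free cell, 1 an obstacle, moves are 4-connected with unit cost, and the heuristic is Manhattan distance. It is not the planner configured in Navigation2, and the function name is hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid where 1 = obstacle and 0 = free.

    Returns the path as a list of (row, col) cells, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates the cost of unit 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

Because the heuristic is admissible, the first time the goal is popped from the queue the reconstructed path is a shortest one.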

-

Image Detection

The front-facing camera is used to detect survivors, providing information about who they are and where they’re located. In our prototype, we use identifiable tags to simulate survivor detection and simplify image recognition.
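Once a tag is detected, its position in the camera image can be combined with the robot's pose from mapping to place the survivor on the map. The sketch below assumes a forward-facing pinhole camera mounted at the robot's center, a known horizontal field of view, and a separately measured distance to the tag (for example, the LiDAR range along the same bearing). The function and parameter names are hypothetical, and tag decoding itself is not shown.

```python
import math


def locate_tag(tag_id, pixel_u, image_width, hfov_rad, distance_m, pose):
    """Estimate a detected tag's position in the map frame (hypothetical helper).

    tag_id: ID decoded from the tag, standing in for the survivor's identity
    pixel_u: horizontal pixel coordinate of the tag centre
    image_width: image width in pixels
    hfov_rad: horizontal field of view in radians (assumed known)
    distance_m: range to the tag, measured separately (assumed)
    pose: (x, y, theta) of the robot in the map frame
    """
    x, y, theta = pose
    cx = image_width / 2.0
    # Pinhole model: focal length in pixels derived from the field of view
    focal_px = cx / math.tan(hfov_rad / 2.0)
    # Positive bearing points to the robot's left; pixel u grows to the right
    bearing = math.atan2(cx - pixel_u, focal_px)
    heading = theta + bearing
    return {
        "id": tag_id,
        "x": x + distance_m * math.cos(heading),
        "y": y + distance_m * math.sin(heading),
    }
```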

-

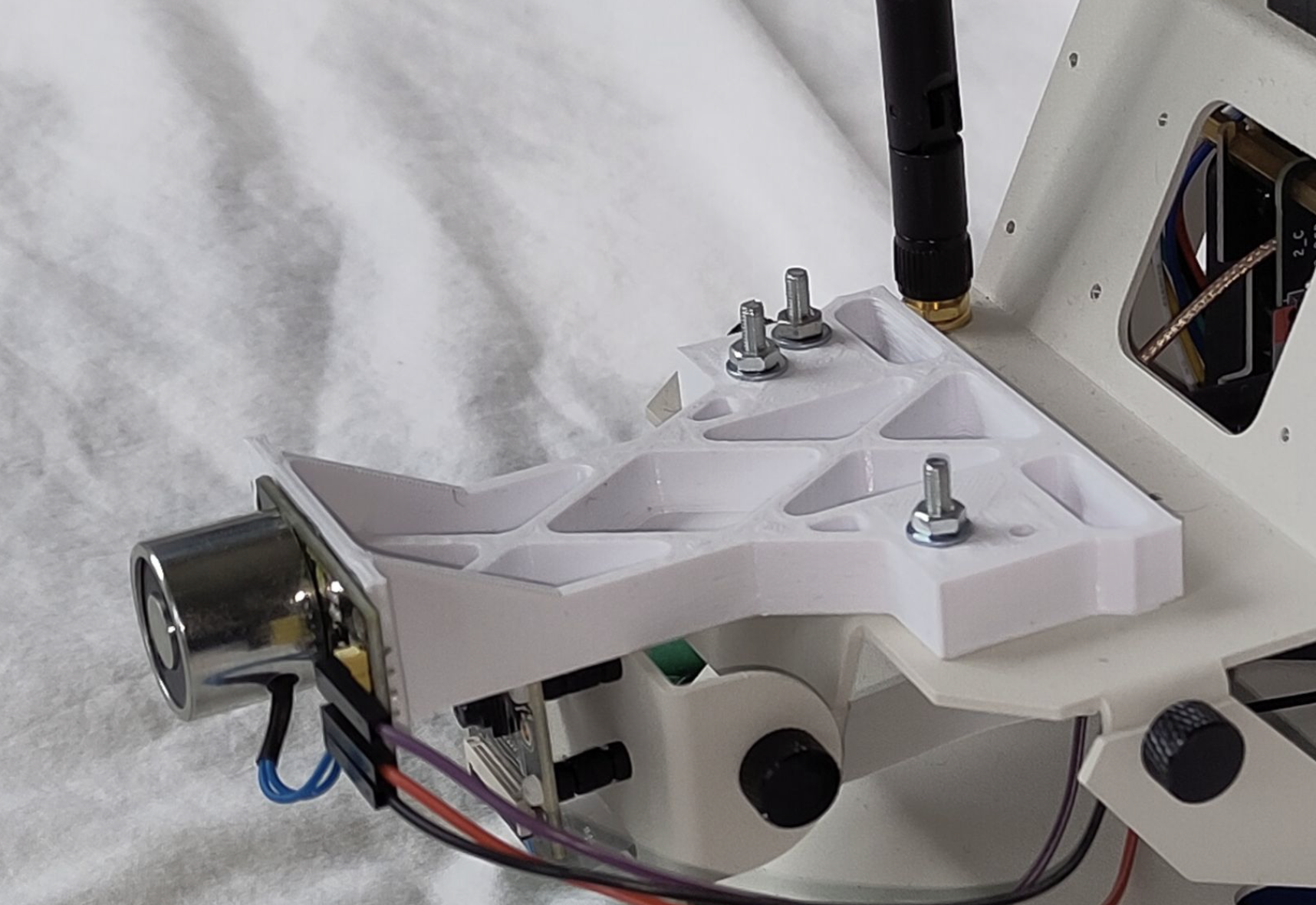

Pick Up Tool

Using a custom electromagnetic arm, the robot can carry aid supplies and transport them directly to survivors. For this proof of concept, magnetic attachments are used to allow simple and reliable pickup and release of the aid packages.
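The pickup and release sequence comes down to switching the electromagnet on and off while tracking whether a package is held. In the sketch below, `set_magnet` is a hypothetical driver callback standing in for whatever hardware interface switches the magnet (for example, a GPIO pin driving a relay or MOSFET). The class and state names are illustrative.

```python
from enum import Enum


class GripState(Enum):
    EMPTY = "empty"
    CARRYING = "carrying"


class MagnetGripper:
    """Minimal pickup/release logic for an electromagnetic end effector.

    set_magnet: hypothetical driver callback taking a bool (True = energized).
    """

    def __init__(self, set_magnet):
        self._set_magnet = set_magnet
        self.state = GripState.EMPTY
        self._set_magnet(False)  # start with the magnet de-energized

    def pick_up(self):
        if self.state is GripState.CARRYING:
            raise RuntimeError("already carrying a package")
        self._set_magnet(True)  # energize to hold the package's magnetic attachment
        self.state = GripState.CARRYING

    def release(self):
        if self.state is GripState.EMPTY:
            raise RuntimeError("nothing to release")
        self._set_magnet(False)  # de-energize so the package drops free
        self.state = GripState.EMPTY
```

Injecting the driver as a callback keeps the logic testable without hardware, for example by passing `print` or a list's `append` method.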

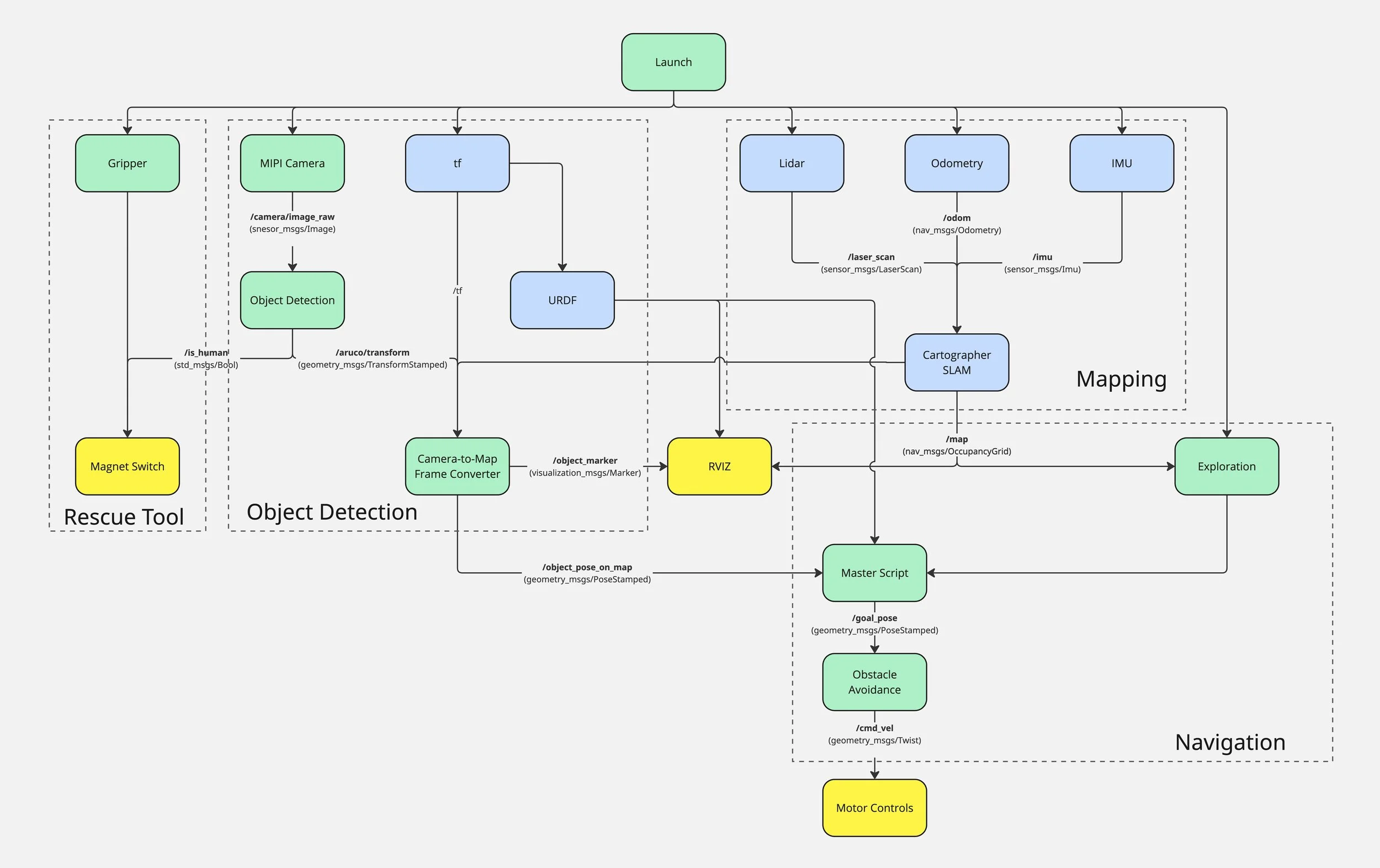

Full Robotic Software Architecture

ROS2 Foxy in Ubuntu Linux 20.04

Software Stack

ROS2 Jazzy

Ubuntu Linux

Navigation2

Cartographer SLAM

OpenCV

Python3

RDK X3

A* Pathfinding